Applications & benchmarks

Here we illustrate FLINT's performance on various benchmarks and give examples of research problems that were solved with FLINT's help.

Arithmetic with huge numbers

Digits of pi

FLINT uses GMP and MPFR under the hood but notably also provides its own arbitrary-size integer and floating-point code which can be 2-10 times faster in many typical applications. A basic benchmark problem is to compute $\pi$ to 100 million digits.

| Implementation | Time |

|---|---|

| GMP (GMP-chudnovsky) | 62.1 s |

| MPFR (builtin function mpfr_const_pi) | 172.9 s |

| FLINT (builtin function arb_const_pi) | 31.6 s |

| FLINT (builtin function arb_const_pi) (8 threads) | 9.34 s |

Benchmark machine: 8-core AMD Ryzen 7 PRO 5850U CPU (Zen 3).

FLINT is about twice as fast as GMP and five times faster than MPFR on this benchmark. When allowed to use multiple threads, FLINT is 6.6x faster than GMP (which is single-threaded only) and 18x faster than MPFR. Note that due to the nature of the algorithm used to compute $\pi$, this benchmark heavily exercises numbers of all sizes, not just 100-million-digit numbers.

We mention that FLINT is about half as fast as the special-purpose, closed-source pi computing program y-cruncher (which does this in 18.3 s on a single core and in 4.45 s on 8 cores); however, FLINT can compute far more general mathematical objects.

Elliptic curve primality proving

As of May 2023, the largest number that has been certified prime using a primality test for numbers of general form (as opposed to special tests such as Lucas-Lehmer for Mersenne primes) is the 86453-digit repunit 11111...11111. This was achieved by Andreas Enge using the elliptic curve primality proving algorithm (ECPP) implemented in his CM software. CM uses FLINT for certain operations such as exponentiation in the ring $(\mathbf{Z}/n \mathbf{Z})[x] / f$ (done using FLINT's fmpz_mod_poly type) as part of the Cantor-Zassenhaus step.

FLINT itself currently includes provable primality tests such as APRCL suitable for smaller numbers (up to a few thousand digits) as well as probable prime tests such as Miller-Rabin and BPSW useful for numbers of any size.

Exact linear algebra

Integer matrix multiplication

Matrix multiplication is a common kernel for other operations. Here we compare timings to multiply two 100 × 100 matrices with b-bit integer entries.

| Language, integer type | Algorithm | $b = 10$ | $b = 100$ | $b = 1000$ |

|---|---|---|---|---|

| Julia, BigInt | Naive (built-in * for Array) | 0.224 | 0.356 | 0.542 |

| Python, int | Naive (@ for numpy.array) | 0.0375 | 0.0731 | 0.890 |

| Python, gmpy2.mpz | Naive (@ for numpy.array) | 0.0479 | 0.0537 | 0.192 |

| Pari/GP, t_INT | Naive ($n^3 \times$ (c += a * b)) | 0.211 | 0.219 | 0.369 |

| Pari/GP, t_INT | Optimized (built-in * for t_MAT) | 0.00147 | 0.00920 | 0.102 |

| GMP (C++ wrapper), mpz_class | Naive ($n^3 \times$ (c += a * b)) | 0.0287 | 0.0294 | 0.169 |

| GMP, mpz_t | Naive ($n^3 \times$ (mpz_addmul)) | 0.0122 | 0.0172 | 0.159 |

| FLINT, fmpz_t | Naive ($n^3 \times$ (fmpz_addmul)) | 0.00395 | 0.0213 | 0.163 |

| FLINT, fmpz_t | Optimized (fmpz_mat_mul), | 0.0000678 | 0.00235 | 0.0456 |

| FLINT, fmpz_t | Optimized (fmpz_mat_mul), 8 threads | 0.0000675 | 0.000800 | 0.0142 |

Timing results are given in seconds. Benchmark machine: 8-core AMD Ryzen 7 PRO 5850U CPU (Zen 3). Note: for this benchmark, we configured FLINT to use the BLAS backend for modular matrix multiplications.

The main takeway is that an optimized integer matrix multiplication algorithm such as that provided by FLINT's fmpz_mat_mul is much faster (sometimes by more than a factor 1000) than a naive loop doing $n^3$ big-integer multiplications and additions. For the matrices in this benchmark, FLINT's fmpz_mat_mul is using a multimodular algorithm. Pari/GP also uses a similar algorithm, but it is somewhat slower than FLINT.

If one implements the naive algorithm, FLINT's fmpz_t is about 3× faster than GMP's mpz_t with small entries (and at worst 20% slower for medium-size entries) due to using an inline representation for values that fit in a single machine word.

Univariate polynomial arithmetic

Tabulating Weil polynomials

Kiran S. Kedlaya reports:

My code in the Sage library for tabulating Weil polynomials makes extensive use of FLINT univariate polynomials over $\mathbf{Z}$, particularly to count real roots using Sturm-Habicht sequences. A concrete application appears in my three recent papers on the relative class number one problem for function fields [I, II, III], where I end up running this code at scale to tabulate millions of Weil polynomials.

Congruent numbers below one trillion

In 2009, FLINT was used together with zn_poly for a large power series computation to determine the congruent numbers up to $10^{12}$, a result which was widely reported in the press ("A Trillion Triangles").

Solving polynomial systems

FLINT integer arithmetic and univariate polynomial arithmetic is used in the msolve library for solving multivariate systems. For example, the authors write:

to tackle large degrees, we revisit asymptotically fast algorithms for Taylor shift (...) and implement them carefully using the FFT-based multiplication of FLINT for univariate polynomials with integer coefficients. This is a major difference with other implementations because of the (wrong) belief that asymptotically fast algorithms are useless in this context. This allows us to obtain a univariate solver which outperforms the state of the art on examples coming from our computations

Multivariate polynomial arithmetic

FLINT includes fast, multithreaded code for multivariate polynomials over $\mathbf{Z}$, $\mathbf{Q}$ and $\mathbf{Z}/n\mathbf{Z}$ and other coefficient rings. Some older (2019) benchmarks results are available (part 1, part 2) demonstrating favorable performance compared to Maple, Trip and Giac on both sparse and dense problems.

Reduction of Feynman integrals

FIRE is a program which performs integration-by-parts reduction of Feynman integrals. As of version 6, FIRE allows using FLINT as a backend for multivariate polynomial arithmetic. An example benchmark problem is the "three-loop banana diagram", about which the authors write:

We consider the unequal mass case, with four different internal masses $m_1, m_2, m_3, m_4$. The external mass is set to a numerical value, $p^2=1$. We reduce a tensor integral whose numerator is a dot product between the loop momentum for the $m_2$ line and the loop momentum for the $m_3$ line. The execution times are shown in Table 5. Unlike the previous benchmarks, this one has an unusually large number of kinematic scales. In this case, FLINT and Symbolica backends offer dramatic speedups, up to about 9 times, over the Fermat backend.

Simplifier Time (1000s) FLINT 0.62, 1.01 Symbolica 1.02, 1.66 Fermat 5.36, 9.23 Time taken for FIRE to perform the IBP reduction for the 3-loop banana diagram with unequal internal masses, with a rank-1 numerator, for three different simplifier backends. Every result is shown as two numbers, indicating the time measured on the two different machines.

Handling algebraic and exact numbers

Equality of two large expressions

Computer algebra systems often struggle to manipulate exact numbers like $\sqrt{2 + \sqrt{3}}$ when the expressions become large. FLINT can sometimes handle such problems more easily. In 2020 a SageMath user reported that SageMath did not finish in six hours when asked to check the equality $A = B$ of two algebraic numbers which arose in a matrix computation. The expressions for the numbers involve nested square roots and have about 7000 terms. The current version of FLINT proves this equality in four seconds.

Cutting squares into similar rectangles

Ian Henderson has a nice writeup about enumerating all ways to cut a square into $n$ similar rectangles. This problem was solved up to $n = 8$ with an exhaustive computer search using the exact real numbers in FLINT/Calcium to represent roots of polynomials.

Numerical computation

The ball arithmetic in FLINT (formerly the separate Arb library) is used in diverse applications (physics, biology, engineering, number theory, etc.) requiring extremely precise and reliable numerical computation.

Certified ODE solving and analytic continuation

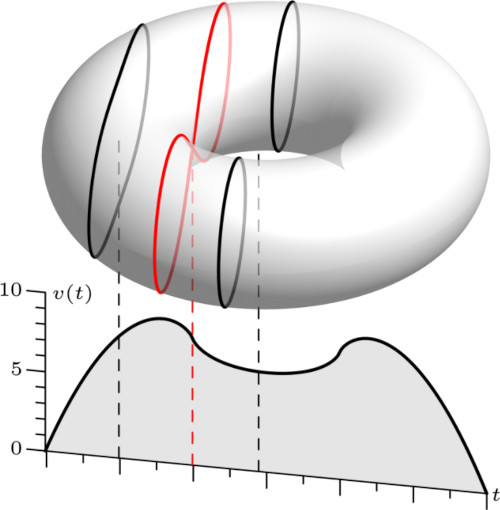

The ore_algebra package for SageMath includes tools developed by Marc Mezzarobba for computing rigorous arbitrary-precision solutions of holonomic ODEs and analytically continuing such solutions through the complex plane. FLINT/Arb is used for the underlying arithmetic with complex numbers and matrices, polynomials and power series. Applications include:

- the construction of a large database of quartic surfaces,

- simplification of differential operators for Feynman integrals,

- computing the volumes of semi-algebraic sets in $n$ dimensions (see illustration), and

- proving uniqueness of the Canham model for biomembranes.

Accurate Gaunt factors for quadrupole radiation

Josef Pradler and Lukas Semmelrock write in The Astrophysical Journal (2021) about computing accurate Gaunt factors for nonrelativistic quadrupole bremsstrahlung:

The calculation of hypergeometric functions of large imaginary arguments is a difficult task [...]. A particular strength of [Arb] is the rigorous computation of hypergeometric functions [...]; common software frameworks such as Mathematica appear not able to yield results for $|\nu_i| \gtrsim 100$ for all required function values in a reasonable amount of time

We use Arb to calculate the hypergeometric functions, Sommerfeld factors, and all coefficients; in short, the entire expression This is required, because, first, products such as $S_i S_f \times |{}_2F_1(i \nu_f, i \nu_i; 1; z)|^2$ can be of the form $huge \times tiny$, and second, because of the occurrence of cancellations in the final linear combination of these terms. Because of factors $\exp(\pm \nu_{i,f})$ contained in $S_{i,f}$, it turns out that the required precision is approximately $|\nu_{i,f}|$. In our tabulation we therefore evaluate the ingredients with up to $10^4$ digits of precision.

The ternary Goldbach conjecture

FLINT/Arb has been used for certain computations in Harald Helfgott's solution of the ternary Goldbach problem, for instance to establish precise bounds for integrals involving the Riemann zeta function in the complex plane. The computations are described in more detail in Helfgott's book and on his website.

Figure: a short subsegment of one of Helfgott's integrals: $\int_{-\tfrac{1}{4}+8i}^{-\tfrac{1}{4}+40000i} \left|\frac{F_{19}(s+\tfrac{1}{2}) F_{19}(s+1)}{s^2}\right| |ds|$, where $F_N(s) = \zeta(s) \prod_{p\leq N} (1 - p^{-s})$.

The Riemann hypothesis

The highest verification of the Riemann hypothesis to date was done in 2020 by Dave Platt and Trim Trudgian, reaching height $3 \cdot 10^{12}$. Parts of their computations used FLINT/Arb ball arithmetic

[...] in place of full interval arithmetic whence there is a space saving of roughly 50%, which make applications more cache friendly.

The de Bruijn-Newman constant

FLINT/Arb was used in a large distributed computation as part of the "polymath" project to bound the de Bruijn-Newman constant. A contributor to that effort wrote:

Very impressed by the robustness of your software as well as by the tremendous speed gains that it has brought us (up till a factor 20-30 faster than Pari/GP). So far we haven't encountered a single bug in the software. Well done!

Traveling wave solutions of PDEs

Joel Dahne and Javier Gómez-Serrano have proved the existence of a periodic highest, cusped, traveling wave solution for the Burgers-Hilbert equation, and more recently also for the fractional KdV equation. The interval computations in the proofs took 10,000 CPU hours to run on a 384-core cluster, employing power series arithmetic and special functions provided by FLINT/Arb. The authors write:

These bounds are highly non-trivial, in particular $\delta_{0}$ is given by the supremum of a function on the interval $[0, \pi]$ which attains its maximum around $10^{-5000}$. [...] Note that these numbers are extremely small, too small to be represented even in standard quadruple precision binary128, though Arb has no problem handling them.